Uthsav: Lightning-Fast AI Image Search

Semantic visual search for wedding inspiration discovery using OpenAI CLIP embeddings and Typesense vector indexing for instant, tag-free image retrieval.

Executive Summary

CyberMind Works built an AI-powered semantic image search engine for Uthsav, a modern wedding planning platform that simplifies how Indian wedding customers discover vendors and inspiration. The key challenge was enabling users to search visually across thousands of wedding images (decoration styles, photography references, bridal looks, stage setups) without relying on manual tagging or keyword-based search.

Built on OpenAI CLIP embeddings for semantic understanding and Typesense vector indexing for fast retrieval, the system lets users search with natural language queries such as "royal mandap" or "minimal pastel theme" and receive relevant image results immediately. It removes the need for manual image tagging and matches on intent, capturing concepts beyond exact keywords.

This case study explores the technical architecture, vector search implementation, and engineering decisions that enable Uthsav to deliver instant, tag-free visual discovery at scale for wedding inspiration.

Problem Statement

Manual Tagging Dependency

- Time-consuming manual labeling of thousands of wedding images

- Inconsistent tagging across different team members

- Error-prone process that misses important visual concepts

Keyword-Only Search Limitations

- Traditional search doesn't match user intent

- "Mandap decor" and "stage decoration" treated as completely different searches

- Exact keyword matching misses semantically similar content

Vocabulary Mismatch

- Users and vendors use different words for the same concept

- Great content gets missed entirely due to terminology differences

- No understanding of synonyms or related concepts

Abstract Search Queries

- Users search in abstract, emotional terms like "royal mandap" or "minimal pastel theme"

- Traditional keyword search can't handle conceptual queries

- Intent-based matching not supported

Slow Retrieval at Scale

- Large image datasets lead to slow browsing experiences

- Users get frustrated waiting for inspiration discovery

- No instant results for visual search

Solution Overview

CyberMind Works created a production-grade vector-based semantic image search engine where both images and text queries are converted into embeddings and matched based on similarity. The system combines:

- OpenAI CLIP Embeddings: Every image is converted into a semantic vector using CLIP, capturing visual meaning and concepts beyond pixel data

- Query Embedding: User text queries are converted into vectors in the same semantic space, enabling intent-based matching

- Typesense Vector Indexing: Fast nearest-neighbor retrieval with in-memory indexing for instant results even at scale

- Similarity Matching: Finds the most relevant images based on semantic similarity, not keyword matching

- Tag-Free Discovery: Eliminates manual image tagging while delivering highly relevant results

This approach creates a Pinterest-style inspiration discovery experience where users can type naturally ("simple white floral theme") and the system matches the concept, not the exact words. The outcome is instant retrieval, higher relevance, and zero manual tagging overhead.
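To make "matched based on similarity" concrete, here is a minimal sketch of similarity ranking in plain Python. It assumes cosine similarity as the measure, which is a common choice for CLIP embeddings but is not confirmed by this case study. The three-dimensional vectors and file names are invented for illustration; real CLIP vectors have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)

def top_k(query_vec, image_vecs, k=3):
    """Return (image_id, score) pairs, most similar first."""
    scored = [
        (image_id, cosine_similarity(query_vec, vec))
        for image_id, vec in image_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy vectors, invented for illustration only (not real CLIP output).
toy_images = {
    "mandap_gold.jpg": [0.9, 0.1, 0.0],
    "pastel_table.jpg": [0.1, 0.9, 0.1],
    "stage_red.jpg": [0.7, 0.2, 0.3],
}
toy_query = [1.0, 0.0, 0.1]  # stands in for an embedded text query

ranked = top_k(toy_query, toy_images, k=2)
for image_id, score in ranked:
    print(f"{image_id}: {score:.3f}")
```

A brute-force scan like this only serves to show the idea. At catalogue scale the vector index performs the nearest-neighbor lookup instead.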

Technical Architecture

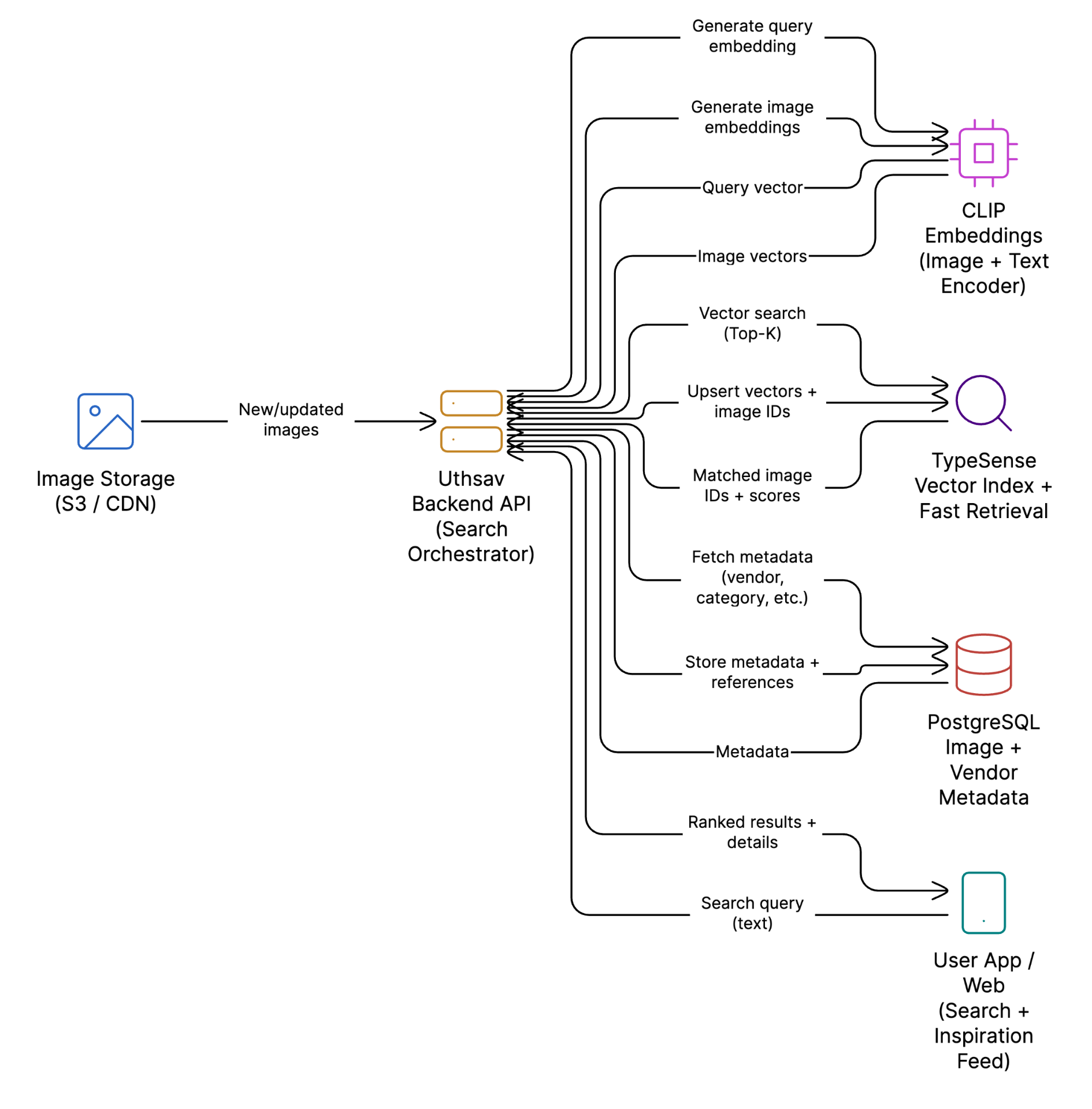

A streamlined architecture combining CLIP embeddings with Typesense vector indexing for lightning-fast semantic search.

System Architecture

Application Layer

- Search request handling

- Query → embedding generation

- Retrieval orchestration

- Response formatting

- Vendor/gallery content mapping

AI / Embedding Layer

- OpenAI CLIP model

- Image → vector conversion

- Text → vector conversion

- Semantic space alignment

- Multi-modal understanding

Vector Storage & Retrieval

- Typesense in-memory index

- Fast nearest-neighbor search

- Embedding + metadata storage

- Relevance-based ranking

- Scalable retrieval

Search Pipeline

How a user query becomes ranked image results in milliseconds.

Image Ingestion & Embedding

When images are uploaded to the platform:

- Images processed through CLIP model

- Converted into numerical embedding vectors

- Embeddings + metadata stored in Typesense

- Dataset becomes "searchable by meaning"
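The ingestion steps above could be sketched as follows. This is an illustration under stated assumptions, not Uthsav's actual schema: the collection name and the `image_url`, `vendor_id`, and `category` fields are hypothetical, and the 512-dimension size assumes the CLIP ViT-B/32 variant (the case study does not say which variant is used). The schema shape (a `float[]` field with `num_dim`) and the JSONL bulk-import format follow Typesense's documented conventions.

```python
import json

# Assumption: CLIP ViT-B/32 produces 512-dimensional vectors; other variants differ.
EMBEDDING_DIM = 512

def build_collection_schema(name="wedding_images"):
    """Typesense collection schema: metadata fields plus one vector field."""
    return {
        "name": name,
        "fields": [
            {"name": "image_url", "type": "string"},                 # hypothetical field
            {"name": "vendor_id", "type": "string", "facet": True},  # hypothetical field
            {"name": "category", "type": "string", "facet": True},   # hypothetical field
            {"name": "embedding", "type": "float[]", "num_dim": EMBEDDING_DIM},
        ],
    }

def build_document(image_id, image_url, vendor_id, category, embedding):
    """Pair one image's metadata with its precomputed CLIP embedding."""
    if len(embedding) != EMBEDDING_DIM:
        raise ValueError(
            f"expected {EMBEDDING_DIM} dimensions, got {len(embedding)}"
        )
    return {
        "id": image_id,
        "image_url": image_url,
        "vendor_id": vendor_id,
        "category": category,
        "embedding": [float(x) for x in embedding],
    }

def to_jsonl(documents):
    """Serialize documents as JSON Lines, the format used for bulk import."""
    return "\n".join(json.dumps(doc) for doc in documents)
```

The embedding itself would come from the CLIP image encoder. It is passed in here as a plain list so the sketch stays independent of any particular model library.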

Query → Embedding Conversion

When a user searches (e.g., "elegant wedding decorations"):

- Query passed to CLIP's text encoder

- Generates query embedding vector

- Vector represents semantic intent

- Same vector space as images
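The query step can be sketched as a thin wrapper around whichever text encoder is in use. The `text_encoder` argument here is a hypothetical callable standing in for CLIP's text encoder, and the L2 normalization is an assumption: it is a common practice that makes a dot product equal to cosine similarity, but the case study does not state whether it is applied.

```python
import math
from typing import Callable, List, Sequence

def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]

def embed_query(
    text: str,
    text_encoder: Callable[[str], Sequence[float]],
) -> List[float]:
    """Clean the user's query, encode it, and return a unit-length vector.

    `text_encoder` is a placeholder for the CLIP text encoder call.
    """
    cleaned = " ".join(text.split())  # collapse repeated whitespace
    if not cleaned:
        raise ValueError("query is empty")
    return l2_normalize(text_encoder(cleaned))
```

Image embeddings would need the same normalization at ingestion time for the two sides to remain comparable.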

Vector Similarity Matching

Typesense performs high-speed matching:

- Finds closest image embeddings

- Nearest-neighbor style retrieval

- Returns most relevant images

- Ranked by similarity score
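A nearest-neighbor request of this kind might be assembled as below. The sketch builds the request body for Typesense's multi-search endpoint, which is generally preferred for vector queries because the embedding makes the query too long for a URL. The collection name, the `embedding` field name, and the default `k` are assumptions carried over for illustration.

```python
def build_vector_search(query_embedding, collection="wedding_images", k=20):
    """Build a multi-search request body for a pure vector (k-NN) query."""
    vector_literal = ", ".join(f"{x:.6f}" for x in query_embedding)
    return {
        "searches": [
            {
                "collection": collection,  # hypothetical collection name
                "q": "*",                  # no keyword filter: rank by vector only
                "vector_query": f"embedding:([{vector_literal}], k:{k})",
                "exclude_fields": "embedding",  # keep large vectors out of the response
            }
        ]
    }

body = build_vector_search([0.1, -0.2], k=5)
print(body["searches"][0]["vector_query"])
```

Excluding the `embedding` field from the response keeps payloads small, since the client needs only the image metadata and not the raw vectors.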

Results & Display

The system returns:

- Image results (inspiration feed)

- Related vendor images

- Fast browsing UX

- Minimal loading time
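As a rough sketch of the response-formatting step, the function below turns a Typesense multi-search response into a compact list for an inspiration feed. It assumes hits carry a `vector_distance` value and that the index uses cosine distance, so that similarity is one minus the distance. The `image_url` and `vendor_id` fields are hypothetical metadata names.

```python
def format_results(multi_search_response, limit=20):
    """Map raw vector-search hits to the fields a gallery UI needs."""
    results = multi_search_response.get("results", [])
    hits = results[0].get("hits", []) if results else []
    items = []
    for hit in hits[:limit]:
        doc = hit["document"]
        distance = hit.get("vector_distance")
        items.append({
            "image_id": doc["id"],
            "image_url": doc.get("image_url"),   # hypothetical metadata field
            "vendor_id": doc.get("vendor_id"),   # hypothetical metadata field
            # Assumes cosine distance, where a lower distance means a closer match.
            "similarity": None if distance is None else round(1.0 - distance, 4),
        })
    return items
```

Carrying `vendor_id` through to the response is one way the related vendor images mentioned above could be linked from each result.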

Why Semantic Search Wins

A direct comparison between traditional keyword search and our AI-powered semantic approach.

Traditional Keyword Search

- "Mandap decor" and "stage decoration" treated as different searches

- Users don't know the "right keywords" to use

- Tagging errors cause missed results

- No understanding of visual similarity

- Vocabulary mismatch between users and vendors

Semantic Vector Search

- Similar meanings cluster together automatically

- Users can type naturally ("simple white floral theme")

- System matches the concept, not exact words

- Visual similarity understood by AI

- No manual tagging dependency

Technology Stack

A focused stack optimized for semantic search performance and scalability.

AI / Embeddings

Search Engine

Application Layer

Data Storage

Outcomes & Impact

The AI image search engine improved both the user experience and vendor discovery in four areas.

Search Relevance

More accurate inspiration discovery with semantic matching

Search Speed

Lightning-fast retrieval experience with Typesense

User Engagement

Users browse more, discover more, spend more time

Vendor Visibility

Better discovery for vendors through intent-based queries

Key Engineering Wins

Conclusion

This engagement showcases CyberMind Works' ability to deliver production-ready AI image search systems built for real-world visual discovery challenges. The Uthsav AI image search feature demonstrates how OpenAI CLIP embeddings, Typesense vector indexing, and semantic similarity matching can create a premium inspiration discovery experience for wedding planning.

By combining multi-modal embeddings that understand both images and text with fast vector search and intent-based matching, the platform enables users to search using natural language queries like "royal mandap" or "minimal pastel theme" and receive instant, relevant results. This eliminates manual tagging overhead while delivering higher relevance than traditional keyword search.

Designed for scale and performance, the system handles thousands of images with in-memory indexing, supports evolving visual content, and maintains instant retrieval speeds without compromising search quality or user experience. The result is a Pinterest-style inspiration discovery experience that makes wedding planning smoother and more exciting for users.


CyberMind Works LLP

10/15, K.M Towers - 1st Floor, Chakrapani Road,

Guindy, Chennai, Tamil Nadu, 600042

Copyright © 2026, CyberMind Works | All rights reserved.